From the two-dimensional disk we now move to three-dimensional space-time. We will meet Einstein-Poincare-Minkowski special relativity, though in a baby version, with x and y but without z in space. That is not too bad, because the famous Lorentz transformations, with length contraction and time dilation, appear already in two-dimensional space-time, with x and t alone. We will discover Lorentz transformations today: first in disguise, and then we will unmask them.

First we recall, from The disk and the hyperbolic model, the relation between the coordinates $(x,y)$ on the Poincare disk $x^2+y^2<1$, and $(X,Y,T)$ on the unit hyperboloid $T^2-X^2-Y^2=1$, $T>0$:

(1) $X=\dfrac{2x}{1-x^2-y^2},\quad Y=\dfrac{2y}{1-x^2-y^2},\quad T=\dfrac{1+x^2+y^2}{1-x^2-y^2}$

(2) $x=\dfrac{X}{1+T},\quad y=\dfrac{Y}{1+T}$
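The two maps can be checked numerically. The following is a minimal Python sketch, assuming Eqs. (1) and (2) are the usual stereographic relations between the disk and the upper sheet of the hyperboloid; the function names `disk_to_hyperboloid` and `hyperboloid_to_disk` are made up for this illustration.

```python
import math

def disk_to_hyperboloid(x, y):
    """Assumed form of Eq. (1): disk point (x, y) -> (X, Y, T)."""
    r2 = x * x + y * y
    if r2 >= 1.0:
        raise ValueError("point must lie strictly inside the unit disk")
    d = 1.0 - r2
    return 2.0 * x / d, 2.0 * y / d, (1.0 + r2) / d

def hyperboloid_to_disk(X, Y, T):
    """Assumed form of Eq. (2): hyperboloid point (X, Y, T) -> (x, y)."""
    return X / (1.0 + T), Y / (1.0 + T)

X, Y, T = disk_to_hyperboloid(0.3, -0.4)
# The image should satisfy T^2 - X^2 - Y^2 = 1 up to rounding.
print(T * T - X * X - Y * Y)
# Going back should reproduce the starting point up to rounding.
print(hyperboloid_to_disk(X, Y, T))
```

Points close to the boundary of the disk are sent far up the hyperboloid, since the denominator $1-x^2-y^2$ tends to zero there.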

We have the group SU(1,1) acting on the disk with fractional linear transformations. With $z=x+iy$ and $A$ in SU(1,1)

(3) $A=\begin{pmatrix}\lambda&\mu\\ \nu&\rho\end{pmatrix}$

the fractional linear action is

(4) $z'=\dfrac{\lambda z+\mu}{\nu z+\rho}$

By the way, we know from previous notes that $A$ is in SU(1,1) if and only if

(5) $\nu=\bar{\mu},\quad \rho=\bar{\lambda},\quad |\lambda|^2-|\mu|^2=1$
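As an illustration of the action, here is a small Python sketch. It assumes the SU(1,1) matrix has rows $(\lambda,\mu)$ and $(\bar\mu,\bar\lambda)$ with $|\lambda|^2-|\mu|^2=1$; the helpers `su11` and `act` are hypothetical names, not taken from the notes.

```python
import cmath
import math

def su11(lam, mu):
    """Build ((lam, mu), (conj(mu), conj(lam))) after checking |lam|^2 - |mu|^2 = 1."""
    if not math.isclose(abs(lam) ** 2 - abs(mu) ** 2, 1.0, rel_tol=1e-12):
        raise ValueError("need |lam|^2 - |mu|^2 = 1")
    return (lam, mu), (mu.conjugate(), lam.conjugate())

def act(A, z):
    """Fractional linear action z -> (a z + b) / (c z + d)."""
    (a, b), (c, d) = A
    return (a * z + b) / (c * z + d)

# Example parameters: lam = cosh(s) e^{i a}, mu = sinh(s) e^{i b} always qualify.
A = su11(math.cosh(0.7) * cmath.exp(0.4j), math.sinh(0.7) * cmath.exp(-1.1j))
z = 0.3 - 0.4j
w = act(A, z)
print(abs(z) < 1, abs(w) < 1)  # the image of a disk point stays in the disk
```

The reason the disk is preserved is the identity $1-|z'|^2=(1-|z|^2)/|\bar\mu z+\bar\lambda|^2$, which follows from $|\lambda|^2-|\mu|^2=1$.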

Having the new point on the disk, with coordinates $(x',y')$, we can use Eq. (1) to calculate the new space-time point coordinates $(X',Y',T')$. This is what we will do now. We will see that even if $z'$ depends on $z$ in a nonlinear way, the space-time coordinates transform linearly. We will calculate the transformation matrix $L$ and express it in terms of $\lambda$ and $\mu$. We will also check that this is a matrix in the group SO(1,2).

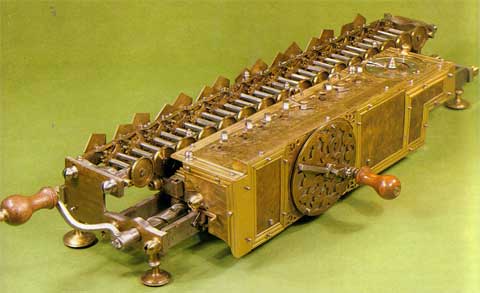

The program above involves algebraic calculations. Doing them by hand is not a good idea. Let me recall a quote from Gottfried Leibniz. According to Wikipedia:

He became one of the most prolific inventors in the field of mechanical calculators. While working on adding automatic multiplication and division to Pascal’s calculator, he was the first to describe a pinwheel calculator in 1685 and invented the Leibniz wheel, used in the arithmometer, the first mass-produced mechanical calculator. He also refined the binary number system, which is the foundation of virtually all digital computers.

“It is unworthy of excellent men to lose hours like slaves in the labor of calculation which could be relegated to anyone else if machines were used.”

— Gottfried Leibniz

I used Mathematica as my machine. The same calculations can certainly be done with Maple, or with free software like Reduce or Maxima. For those interested, the code that I used and the results can be reviewed as a separate HTML document: From SU(1,1) to Lorentz.

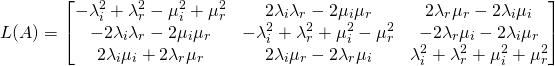

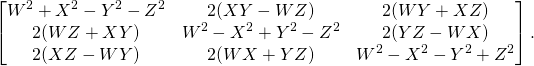

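Here I will provide only the results. It is important to notice that while the matrix $A$ has complex entries, the matrix $L$ is real. The entries of $L$ depend on real and imaginary parts of $\lambda$ and $\mu$:

(6) $\lambda=\lambda_r+i\lambda_i,\quad \mu=\mu_r+i\mu_i$

Here is the calculated result for $L$, acting on the column $(X,Y,T)^T$:

(7) $L=\begin{pmatrix}\lambda_r^2-\lambda_i^2+\mu_r^2-\mu_i^2 & 2(\mu_r\mu_i-\lambda_r\lambda_i) & 2(\lambda_r\mu_r-\lambda_i\mu_i)\\ 2(\lambda_r\lambda_i+\mu_r\mu_i) & \lambda_r^2-\lambda_i^2-\mu_r^2+\mu_i^2 & 2(\lambda_r\mu_i+\lambda_i\mu_r)\\ 2(\lambda_r\mu_r+\lambda_i\mu_i) & 2(\lambda_r\mu_i-\lambda_i\mu_r) & \lambda_r^2+\lambda_i^2+\mu_r^2+\mu_i^2\end{pmatrix}$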
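The linearity claim can be tested without a computer algebra system. The sketch below is an assumption-laden illustration, not the Mathematica code referred to above: it takes the stereographic form of Eq. (1), the action $z'=(\lambda z+\mu)/(\bar\mu z+\bar\lambda)$, and a candidate matrix obtained from $X'+iY'=\lambda^2(X+iY)+\mu^2(X-iY)+2\lambda\mu T$ and $T'=(|\lambda|^2+|\mu|^2)T+2\,\mathrm{Re}\,(\lambda\bar\mu(X+iY))$. The name `lorentz_from_su11` is hypothetical.

```python
import cmath
import math

def disk_to_hyperboloid(z):
    """Assumed form of Eq. (1) for a complex disk point z = x + iy."""
    d = 1.0 - abs(z) ** 2
    return (2.0 * z.real / d, 2.0 * z.imag / d, (1.0 + abs(z) ** 2) / d)

def lorentz_from_su11(lam, mu):
    """Candidate real 3x3 matrix acting on (X, Y, T), built from lam and mu."""
    lr, li, mr, mi = lam.real, lam.imag, mu.real, mu.imag
    return [
        [lr*lr - li*li + mr*mr - mi*mi, 2*(mr*mi - lr*li), 2*(lr*mr - li*mi)],
        [2*(lr*li + mr*mi), lr*lr - li*li - mr*mr + mi*mi, 2*(lr*mi + li*mr)],
        [2*(lr*mr + li*mi), 2*(lr*mi - li*mr), lr*lr + li*li + mr*mr + mi*mi],
    ]

def apply(L, p):
    """Multiply the 3x3 matrix L by the column vector p."""
    return tuple(sum(L[i][k] * p[k] for k in range(3)) for i in range(3))

lam = math.cosh(0.7) * cmath.exp(0.4j)   # example element of SU(1,1)
mu = math.sinh(0.7) * cmath.exp(-1.1j)
z = 0.3 - 0.4j
z_new = (lam * z + mu) / (mu.conjugate() * z + lam.conjugate())

via_disk = disk_to_hyperboloid(z_new)                      # nonlinear route
via_matrix = apply(lorentz_from_su11(lam, mu), disk_to_hyperboloid(z))  # linear route
max_diff = max(abs(a - b) for a, b in zip(via_disk, via_matrix))
print(max_diff)  # should be at rounding level
```

Both routes should agree for any point of the disk and any admissible pair $(\lambda,\mu)$; a single sample point is of course only a spot check, not a proof.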

In From SU(1,1) to Lorentz it is first verified that the matrix $L$ is of determinant 1. Then it is verified that it preserves the Minkowski space-time metric. With $G$ defined as

(8) $G=\begin{pmatrix}-1&0&0\\0&-1&0\\0&0&1\end{pmatrix}$

we have

(9) $L^TGL=G$

Since $L_{33}\geq 1$, the transformation $L$ preserves the time direction. Thus $L$ is an element of the proper Lorentz group $SO_+(1,2)$.
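The three properties (determinant 1, invariance of the metric, positive time-time entry) can also be spot-checked numerically. This is only a sketch under assumptions: the metric is taken as $\mathrm{diag}(-1,-1,+1)$ in the coordinate order $(X,Y,T)$, and `lorentz_from_su11` is a hypothetical helper encoding a candidate form of the matrix in terms of the real and imaginary parts of $\lambda$ and $\mu$.

```python
import cmath
import math

def lorentz_from_su11(lam, mu):
    """Candidate real 3x3 matrix acting on (X, Y, T), built from lam and mu."""
    lr, li, mr, mi = lam.real, lam.imag, mu.real, mu.imag
    return [
        [lr*lr - li*li + mr*mr - mi*mi, 2*(mr*mi - lr*li), 2*(lr*mr - li*mi)],
        [2*(lr*li + mr*mi), lr*lr - li*li - mr*mr + mi*mi, 2*(lr*mi + li*mr)],
        [2*(lr*mr + li*mi), 2*(lr*mi - li*mr), lr*lr + li*li + mr*mr + mi*mi],
    ]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def transpose(A):
    return [list(row) for row in zip(*A)]

def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

G = [[-1, 0, 0], [0, -1, 0], [0, 0, 1]]  # assumed signature (-, -, +)

lam = math.cosh(0.9) * cmath.exp(1.3j)   # example element of SU(1,1)
mu = math.sinh(0.9) * cmath.exp(0.2j)
L = lorentz_from_su11(lam, mu)

LtGL = matmul(transpose(L), matmul(G, L))
metric_error = max(abs(LtGL[i][j] - G[i][j]) for i in range(3) for j in range(3))
print(det3(L), metric_error, L[2][2])
```

In this parametrization the time-time entry equals $|\lambda|^2+|\mu|^2=1+2|\mu|^2$, which makes the bound by 1 evident.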

Remark: Of course we could have chosen $G$ with $(+1,+1,-1)$ on the diagonal. We would have the group SO(2,1), and we would write the hyperboloid as $X^2+Y^2-T^2=-1$. It is a question of convention.

In SU(1,1) straight lines on the disk we considered three one-parameter subgroups of SU(1,1):

(10)

(11)

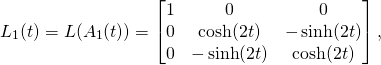

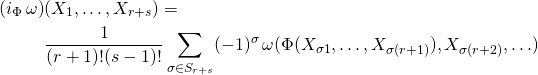

We can now use Eq. (7) in order to see which space-time transformations they implement. Again I calculated it obeying Leibniz and using a machine (see From SU(1,1) to Lorentz).

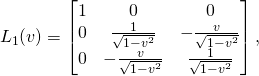

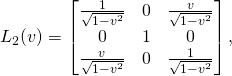

Here are the results of the machine work:

(12)

(13)

(14)
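For readers who want to reproduce this step without Mathematica, here is a hedged Python sketch. The parametrizations of the three one-parameter subgroups are assumptions made for this example: $\lambda=\cosh(t/2),\ \mu=\sinh(t/2)$ for the first, $\lambda=\cosh(t/2),\ \mu=-i\sinh(t/2)$ for the second (the sign of $\mu$ is a convention and may differ from the one used in the earlier post), and $\lambda=e^{i\phi/2},\ \mu=0$ for the third. The helper `lorentz_from_su11` is again a hypothetical name for a candidate form of the matrix $L$.

```python
import math

def lorentz_from_su11(lam, mu):
    """Candidate real 3x3 matrix acting on (X, Y, T), built from lam and mu."""
    lr, li, mr, mi = lam.real, lam.imag, mu.real, mu.imag
    return [
        [lr*lr - li*li + mr*mr - mi*mi, 2*(mr*mi - lr*li), 2*(lr*mr - li*mi)],
        [2*(lr*li + mr*mi), lr*lr - li*li - mr*mr + mi*mi, 2*(lr*mi + li*mr)],
        [2*(lr*mr + li*mi), 2*(lr*mi - li*mr), lr*lr + li*li + mr*mr + mi*mi],
    ]

def family_1(t):
    # Assumed: real off-diagonal entry, mixes X and T.
    return lorentz_from_su11(complex(math.cosh(t / 2)), complex(math.sinh(t / 2)))

def family_2(t):
    # Assumed: imaginary off-diagonal entry, mixes Y and T.
    return lorentz_from_su11(complex(math.cosh(t / 2)), complex(0.0, -math.sinh(t / 2)))

def family_3(phi):
    # Assumed: diagonal phase matrix, rotates the (X, Y) plane by phi.
    return lorentz_from_su11(complex(math.cos(phi / 2), math.sin(phi / 2)), 0j)

for name, M in (("L1", family_1(0.5)), ("L2", family_2(0.5)), ("L3", family_3(0.5))):
    print(name)
    for row in M:
        print("  ", [round(entry, 6) for entry in row])
```

With these assumptions the first two families contain only $\cosh t$ and $\pm\sinh t$ in a $2\times 2$ block involving $T$, and the third contains $\cos\phi$ and $\pm\sin\phi$ in the $(X,Y)$ block, with $T$ untouched. Note that the half-angles $t/2$ and $\phi/2$ in SU(1,1) become full angles in the $3\times 3$ matrices.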

The third family is a simple Euclidean rotation in the $(X,Y)$ plane. That is why I denoted the parameter with the letter $\phi$. In order to “decode” the first two one-parameter subgroups it is convenient to introduce a new variable $v$ and set $t=\mathrm{arctanh}(v)$. The group property $L(t_1)L(t_2)=L(t_1+t_2)$ is then lost, but the matrices become evidently those of special Lorentz transformations, $L_1$ transforming $X$ and $T$, leaving $Y$ unchanged, and $L_2$ transforming $(Y,T)$ and leaving $X$ unchanged (though with a different sign of $v$). Taking into account the identities

(15) $\cosh(\mathrm{arctanh}\,v)=\dfrac{1}{\sqrt{1-v^2}},\quad \sinh(\mathrm{arctanh}\,v)=\dfrac{v}{\sqrt{1-v^2}}$

we get

(16)

(17)
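A short worked equation may help to see what replaces the lost group property. It assumes that the substitution is $t=\mathrm{arctanh}\,v$, in units where the speed of light is 1. Parameters $t$ still add under composition, so the velocities compose through the addition formula for the hyperbolic tangent:

```latex
\begin{align*}
t &= t_1 + t_2, \qquad v_k = \tanh t_k, \\
v &= \tanh(t_1 + t_2)
   = \frac{\tanh t_1 + \tanh t_2}{1 + \tanh t_1 \tanh t_2}
   = \frac{v_1 + v_2}{1 + v_1 v_2}.
\end{align*}
```

For example, $v_1=v_2=\tfrac12$ gives $v=\tfrac{1}{1+1/4}=\tfrac45$, not $1$: the parameter $t$ is additive, while $v$ obeys the relativistic velocity addition law and always stays below 1.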

In the following posts we will use the relativistic Minkowski space distance on the hyperboloid for finding the distance formula on the Poincare disk.
