In “Nobody understands quantum mechanics, but spin is fun” we met the three Pauli matrices $\sigma_1,\sigma_2,\sigma_3$. They are kinda OK, but they do not quite fit our purpose. Therefore I will replace them with another set of matrices, which I will call $s_1,s_2,s_3$. They are defined the same way as the Pauli matrices, except that $s_2$ has a different sign:

$$s_1=\begin{bmatrix}0&1\\1&0\end{bmatrix},\tag{1}$$

$$s_2=\begin{bmatrix}0&i\\-i&0\end{bmatrix},\tag{2}$$

$$s_3=\begin{bmatrix}1&0\\0&-1\end{bmatrix}.\tag{3}$$

Their products then satisfy

$$s_1s_2=-is_3,\qquad s_2s_3=-is_1,\qquad s_3s_1=-is_2.\tag{4}$$
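The minus signs in Eq. (4) are easy to check by direct multiplication. Below is a minimal sketch in plain Python (standard library only, $2\times 2$ matrices stored as nested lists of complex numbers). It assumes $s_1=\sigma_1$, $s_3=\sigma_3$ and $s_2=-\sigma_2$; the helper names `matmul`, `scale`, `close` and `cyclic_sign` are made up for this illustration. `cyclic_sign` reports whether a triple of matrices obeys the cyclic product rule with $+i$ or with $-i$ on the right.

```python
# Sketch: compare the cyclic product rules of the Pauli matrices and of the
# modified set s1, s2, s3. Assumption: s1 = sigma1, s2 = -sigma2, s3 = sigma3.
# 2x2 matrices are nested lists of complex numbers; helper names are illustrative.

SIGMA1 = [[0, 1], [1, 0]]
SIGMA2 = [[0, -1j], [1j, 0]]
SIGMA3 = [[1, 0], [0, -1]]

S1 = SIGMA1
S2 = [[0, 1j], [-1j, 0]]  # the second Pauli matrix with its sign flipped
S3 = SIGMA3


def matmul(a, b):
    """Product of two 2x2 matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(2)) for c in range(2)]
            for r in range(2)]


def scale(z, a):
    """Multiply every entry of a 2x2 matrix by the scalar z."""
    return [[z * x for x in row] for row in a]


def close(a, b, tol=1e-12):
    """Entrywise equality of two 2x2 matrices up to rounding."""
    return all(abs(a[r][c] - b[r][c]) < tol for r in range(2) for c in range(2))


def cyclic_sign(m1, m2, m3):
    """+1 if m1 m2 = i m3 (cyclically), -1 if m1 m2 = -i m3 (cyclically), else 0."""
    triples = [(m1, m2, m3), (m2, m3, m1), (m3, m1, m2)]
    for sign in (1, -1):
        if all(close(matmul(a, b), scale(sign * 1j, c)) for a, b, c in triples):
            return sign
    return 0


print(cyclic_sign(SIGMA1, SIGMA2, SIGMA3))  # 1: plus signs for the Pauli matrices
print(cyclic_sign(S1, S2, S3))              # -1: minus signs, as in Eq. (4)
```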

The Pauli matrices $\sigma_1,\sigma_2,\sigma_3$ would have plus signs on the right in Eq. (4). We want minus signs there. Why? We will soon see why. But perhaps I should mention now that when Pauli is around, many things do not go quite right. That is the famous “Pauli effect”. From the Wikipedia article “Pauli effect”:

The Pauli effect is a term referring to the apparently mysterious, anecdotal failure of technical equipment in the presence of Austrian theoretical physicist Wolfgang Pauli. The term was coined using his name after numerous instances in which demonstrations involving equipment suffered technical problems only when he was present.

One example:

In 1934, Pauli saw a failure of his car during a honeymoon tour with his second wife as proof of a real Pauli effect since it occurred without an obvious external cause.

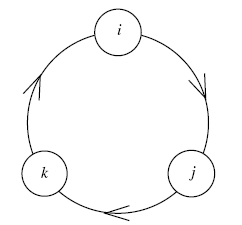

You can find more examples in the Wikipedia article. The car breakdown during a honeymoon with Pauli’s second wife is somehow related to the change of the sign of the second matrix above. And now it is easy to relate our second set of matrices to quaternions. It was Sir William Rowan Hamilton who introduced quaternions, in 1843. They have three imaginary units $\mathbf{i},\mathbf{j},\mathbf{k}$ satisfying:

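$$\mathbf{i}^2=\mathbf{j}^2=\mathbf{k}^2=-1,\tag{5}$$

$$\mathbf{i}\mathbf{j}=-\mathbf{j}\mathbf{i}=\mathbf{k},\qquad \mathbf{j}\mathbf{k}=-\mathbf{k}\mathbf{j}=\mathbf{i},\qquad \mathbf{k}\mathbf{i}=-\mathbf{i}\mathbf{k}=\mathbf{j}.\tag{6}$$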

When Hamilton invented quaternions, he thought of them as abstract objects obeying simple algebraic rules. But now we can realize them as complex $2\times 2$ matrices. To this end it is enough to define

$$\mathbf{i}=i\,s_1,\qquad \mathbf{j}=i\,s_2,\qquad \mathbf{k}=i\,s_3,\tag{7}$$

and notice that the algebraic relations defining the multiplication table of quaternions, Eqs. (5) and (6), are then automatically satisfied! If we had used the Pauli matrices $\sigma_1,\sigma_2,\sigma_3$, we would have had to use $\mathbf{i}=-i\sigma_1,\ \mathbf{j}=-i\sigma_2,\ \mathbf{k}=-i\sigma_3$ in the above equations, which is not so nice. You would have asked: why minus rather than plus?
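A small numerical check of this claim, sketched in plain Python under the same conventions ($s_2=-\sigma_2$, matrices as nested lists of complex numbers). The helper `satisfies_hamilton` is hypothetical: it tests Eqs. (5) and (6) for a triple of matrices. We feed it the units $i s_k$, the Pauli-based units $-i\sigma_k$, and, for contrast, $+i\sigma_k$.

```python
# Sketch: realize the quaternion units as 2x2 complex matrices and test
# Hamilton's relations i^2 = j^2 = k^2 = -1, ij = -ji = k, jk = -kj = i, ki = -ik = j.
# Assumption: s1 = sigma1, s2 = -sigma2, s3 = sigma3; helper names are illustrative.

S = [[[0, 1], [1, 0]], [[0, 1j], [-1j, 0]], [[1, 0], [0, -1]]]        # s1, s2, s3
SIGMA = [[[0, 1], [1, 0]], [[0, -1j], [1j, 0]], [[1, 0], [0, -1]]]    # Pauli
IDENTITY = [[1, 0], [0, 1]]


def matmul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(2)) for c in range(2)]
            for r in range(2)]


def scale(z, a):
    return [[z * x for x in row] for row in a]


def close(a, b, tol=1e-12):
    return all(abs(a[r][c] - b[r][c]) < tol for r in range(2) for c in range(2))


def satisfies_hamilton(qi, qj, qk):
    """True if the three matrices obey the quaternion multiplication table."""
    minus_one = scale(-1, IDENTITY)
    if not all(close(matmul(u, u), minus_one) for u in (qi, qj, qk)):
        return False
    for a, b, c in ((qi, qj, qk), (qj, qk, qi), (qk, qi, qj)):
        # a b = c and b a = -c
        if not (close(matmul(a, b), c) and close(matmul(b, a), scale(-1, c))):
            return False
    return True


QI, QJ, QK = (scale(1j, s) for s in S)                        # i*s1, i*s2, i*s3
print(satisfies_hamilton(QI, QJ, QK))                         # True
print(satisfies_hamilton(*(scale(-1j, s) for s in SIGMA)))    # True: Pauli needs -i
print(satisfies_hamilton(*(scale(1j, s) for s in SIGMA)))     # False: +i fails
```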

Some care is needed, however. One should distinguish the bold $\mathbf{i}$, the first unit quaternion, from the imaginary complex number $i$. One should also note that the squares of the three imaginary quaternionic units, in their matrix realization, are $-I$, that is, “minus the identity matrix”, instead of just the number $-1$ as in Hamilton’s definition.

In the matrix realization we also have:

$$\mathbf{i}^*=-\mathbf{i},\qquad \mathbf{j}^*=-\mathbf{j},\qquad \mathbf{k}^*=-\mathbf{k},\tag{8}$$

where the star $^*$ denotes Hermitian conjugation.
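Eq. (8) follows because each $s_k$ is Hermitian, so $(i s_k)^*=-i s_k^*=-i s_k$. A tiny sketch in plain Python that checks this entrywise; the matrices are nested lists, $s_2$ is assumed to be $\begin{bmatrix}0&i\\-i&0\end{bmatrix}$, and the names `dagger`, `is_hermitian` and `is_anti_hermitian` are illustrative only.

```python
# Sketch: check Eq. (8), u* = -u, for the matrix quaternion units u = i*s_k.
# Assumption: s2 = [[0, i], [-i, 0]] (the sign-flipped Pauli sigma2).

S = [[[0, 1], [1, 0]], [[0, 1j], [-1j, 0]], [[1, 0], [0, -1]]]  # s1, s2, s3


def scale(z, a):
    return [[z * x for x in row] for row in a]


def dagger(a):
    """Hermitian conjugate: transpose and complex-conjugate every entry."""
    return [[complex(a[c][r]).conjugate() for c in range(2)] for r in range(2)]


def close(a, b, tol=1e-12):
    return all(abs(a[r][c] - b[r][c]) < tol for r in range(2) for c in range(2))


def is_hermitian(u):
    return close(dagger(u), u)


def is_anti_hermitian(u):
    return close(dagger(u), scale(-1, u))


UNITS = [scale(1j, s) for s in S]               # bold i, j, k
print([is_hermitian(s) for s in S])             # [True, True, True]
print([is_anti_hermitian(u) for u in UNITS])    # [True, True, True]
```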

A general quaternion $q$ is a sum:

$$q=q_0+q_1\mathbf{i}+q_2\mathbf{j}+q_3\mathbf{k},$$

with real coefficients $q_0,q_1,q_2,q_3$.

In the matrix realization it is represented by the matrix

$$q=\begin{bmatrix}q_0+iq_3&-q_2+iq_1\\ q_2+iq_1&q_0-iq_3\end{bmatrix}.\tag{9}$$

The conjugated quaternion, $\bar{q}=q_0-q_1\mathbf{i}-q_2\mathbf{j}-q_3\mathbf{k}$, is represented by the Hermitian conjugated matrix $q^*$. Notice that $q\bar{q}=\bar{q}q=q_0^2+q_1^2+q_2^2+q_3^2$. Unit quaternions are quaternions that have the property that $q\bar{q}=1$. They are represented by matrices $q$ such that $qq^*=q^*q=I$, with $I$ the identity matrix, that is, by unitary matrices. Moreover, we can check that then

$$\det q=(q_0+iq_3)(q_0-iq_3)-(-q_2+iq_1)(q_2+iq_1)=q_0^2+q_1^2+q_2^2+q_3^2=1.$$
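These statements about the matrix of a quaternion can be spot-checked numerically. The sketch below (plain Python, hypothetical helper names, arbitrary sample coefficients) builds the matrix of $q=q_0+q_1\mathbf{i}+q_2\mathbf{j}+q_3\mathbf{k}$ both as a linear combination of $\mathbf{i}=is_1$, $\mathbf{j}=is_2$, $\mathbf{k}=is_3$ (with $s_2$ the sign-flipped $\sigma_2$) and from the closed form $\begin{bmatrix}q_0+iq_3&-q_2+iq_1\\q_2+iq_1&q_0-iq_3\end{bmatrix}$. It then checks $qq^*=(q_0^2+q_1^2+q_2^2+q_3^2)I$, the determinant, and that the conjugate quaternion corresponds to $q^*$.

```python
# Sketch: the matrix of q = q0 + q1 i + q2 j + q3 k, built in two ways, and
# checks of q q* = |q|^2 I, det q = |q|^2, and conjugate quaternion <-> q*.
# Assumption: i = i*s1, j = i*s2, k = i*s3 with s2 = [[0, i], [-i, 0]].
# Helper names and sample coefficients are illustrative.

import math

IDENTITY = [[1, 0], [0, 1]]
UNITS = [[[0, 1j], [1j, 0]],    # bold i = i*s1
         [[0, -1], [1, 0]],     # bold j = i*s2
         [[1j, 0], [0, -1j]]]   # bold k = i*s3


def matmul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(2)) for c in range(2)]
            for r in range(2)]


def dagger(a):
    return [[complex(a[c][r]).conjugate() for c in range(2)] for r in range(2)]


def close(a, b, tol=1e-12):
    return all(abs(a[r][c] - b[r][c]) < tol for r in range(2) for c in range(2))


def det(a):
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]


def from_units(q0, q1, q2, q3):
    """q0*I + q1*i + q2*j + q3*k as an explicit linear combination of matrices."""
    basis = [IDENTITY] + UNITS
    coeffs = (q0, q1, q2, q3)
    return [[sum(c * m[r][col] for c, m in zip(coeffs, basis)) for col in range(2)]
            for r in range(2)]


def quaternion_matrix(q0, q1, q2, q3):
    """Closed form of the same matrix."""
    return [[complex(q0, q3), complex(-q2, q1)],
            [complex(q2, q1), complex(q0, -q3)]]


q = (0.5, -1.0, 2.0, 0.25)
Q = quaternion_matrix(*q)
norm2 = sum(x * x for x in q)                                          # 5.3125
print(close(Q, from_units(*q)))                                        # True
print(close(matmul(Q, dagger(Q)), [[norm2, 0], [0, norm2]]))           # True
print(abs(det(Q) - norm2) < 1e-12)                                     # True
print(close(dagger(Q), quaternion_matrix(q[0], -q[1], -q[2], -q[3])))  # True

# Rescaling to q0^2 + q1^2 + q2^2 + q3^2 = 1 gives a unitary matrix of determinant one.
U = quaternion_matrix(*(x / math.sqrt(norm2) for x in q))
print(close(matmul(U, dagger(U)), IDENTITY), abs(det(U) - 1) < 1e-12)  # True True
```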

Therefore matrices representing unit quaternions are unitary of determinant one. Such matrices form a group, the special unitary group $SU(2)$. Yet, to dot the i’s and cross the t’s, we still need to show that every $2\times 2$ unitary matrix of determinant one is of the form (9). Let therefore $U$ be such a matrix:

$$U=\begin{bmatrix}\alpha&\beta\\ \gamma&\delta\end{bmatrix}.$$

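Since $\det U=\alpha\delta-\beta\gamma=1$, we can easily check that

$$U\begin{bmatrix}\delta&-\beta\\ -\gamma&\alpha\end{bmatrix}=\begin{bmatrix}1&0\\0&1\end{bmatrix}.$$

Therefore

$$U^{-1}=\begin{bmatrix}\delta&-\beta\\ -\gamma&\alpha\end{bmatrix}.$$

On the other hand, $U$ is unitary, which means $U^{-1}=U^*$. But

$$U^*=\begin{bmatrix}\bar\alpha&\bar\gamma\\ \bar\beta&\bar\delta\end{bmatrix}.$$

Therefore we must have

$$\delta=\bar\alpha,\qquad \gamma=-\bar\beta.$$

That is, $U=\begin{bmatrix}\alpha&\beta\\ -\bar\beta&\bar\alpha\end{bmatrix}$. Writing $\alpha=q_0+iq_3$ and $\beta=-q_2+iq_1$ with real $q_0,q_1,q_2,q_3$, we get the form (9).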
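The argument can also be watched at work numerically. In this sketch (plain Python, standard library only) a random element of $SU(2)$ is produced without assuming the form (9): two random complex columns are orthonormalized by Gram–Schmidt, and the result is divided by a square root of its determinant. We then verify $\delta=\bar\alpha$, $\gamma=-\bar\beta$ and read off $q_0,\dots,q_3$ from $\alpha=q_0+iq_3$, $\beta=-q_2+iq_1$. The function names are hypothetical, and a random test is an illustration, not a replacement for the proof.

```python
# Sketch: a random element of SU(2), built without assuming the form (9), turns
# out to satisfy delta = conj(alpha) and gamma = -conj(beta).
# Standard library only; function names are illustrative.

import cmath
import math
import random


def det(a):
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]


def random_su2(rng):
    """A random 2x2 unitary matrix with determinant 1 (via Gram-Schmidt)."""
    a = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(2)]
    b = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(2)]
    # Normalize a, remove its component from b, then normalize b.
    norm_a = math.sqrt(sum(abs(z) ** 2 for z in a))
    a = [z / norm_a for z in a]
    overlap = sum(x.conjugate() * y for x, y in zip(a, b))
    b = [y - overlap * x for x, y in zip(a, b)]
    norm_b = math.sqrt(sum(abs(z) ** 2 for z in b))
    b = [z / norm_b for z in b]
    u = [[a[0], b[0]], [a[1], b[1]]]  # orthonormal columns, so u is unitary
    # |det u| = 1; dividing by a square root of det u keeps u unitary and sets det = 1.
    c = cmath.sqrt(det(u))
    return [[z / c for z in row] for row in u]


def su2_to_quaternion(u, tol=1e-9):
    """(q0, q1, q2, q3) with alpha = q0 + i q3 and beta = -q2 + i q1."""
    (alpha, beta), (gamma, delta) = u
    if abs(delta - alpha.conjugate()) > tol or abs(gamma + beta.conjugate()) > tol:
        raise ValueError("not of the form [[alpha, beta], [-conj(beta), conj(alpha)]]")
    return alpha.real, beta.imag, -beta.real, alpha.imag


def quaternion_matrix(q0, q1, q2, q3):
    """The matrix [[q0 + i q3, -q2 + i q1], [q2 + i q1, q0 - i q3]]."""
    return [[complex(q0, q3), complex(-q2, q1)],
            [complex(q2, q1), complex(q0, -q3)]]


U = random_su2(random.Random(2024))
q = su2_to_quaternion(U)
V = quaternion_matrix(*q)
print(sum(x * x for x in q))  # close to 1: a unit quaternion
print(max(abs(U[r][c] - V[r][c]) for r in range(2) for c in range(2)))  # rounding-level only
```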

We are interested in rotations in our 3D space. Quantum mechanics suggests using matrices from the group $SU(2)$. We now know that they are unit quaternions in disguise. But, on the other hand, perhaps quaternions are matrices from $SU(2)$ in disguise? When Hamilton was inventing quaternions, he did not know that he was inventing something that would be useful for rotations. He simply wanted to invent “the algebra of space”. Maxwell first tried to apply quaternions in electrodynamics (for the $\nabla\cdot$ and $\nabla\times$ operators), but that was pretty soon abandoned. Mathematicians at that time were complaining (see Robert Perceval Graves, *Addendum to the Life of Sir William Rowan Hamilton, LL.D., D.C.L.: On Sir W. R. Hamilton’s Irish Descent; On the Calculus of Quaternions*, Dublin: Hodges, Figgis, and Co., 1891):

“As a whole the method is pronounced by most mathematicians to be neither easy nor attractive, the interpretation being hazy or metaphysical and seldom clear and precise.”

Nowadays quaternions are best understood in the framework of Clifford algebras. Unit quaternions are just one example of what are called “spin groups”. We do not need such a general framework here. We will be quite happy with just quaternions and the group $SU(2)$. But we still have to relate the matrices $U$ from $SU(2)$ to rotations, and to learn how to use them. Perhaps I should mention already here that the idea is to consider rotations as points of a certain space. We want to learn about the geometry of this space, and we want to investigate different curves in this space. Some of these curves describe rotations and flips of an asymmetric spinning top. Can they be interpreted as “free fall” in this space under some force of gravitation, when gravitation is related to inertia? Perhaps this way we will be able to get a glimpse into the otherwise mysterious connection of gravity to quantum mechanics? These questions may sound somewhat hazy and metaphysical. Therefore let us turn to good old algebra.

With every vector $\vec{x}=(x_1,x_2,x_3)$ in our 3D space we can associate a Hermitian matrix $X$ of trace zero:

$$X=x_1s_1+x_2s_2+x_3s_3=\begin{bmatrix}x_3&x_1+ix_2\\ x_1-ix_2&-x_3\end{bmatrix}.\tag{10}$$

In fact, as is very easy to see, every Hermitian matrix of trace zero is of this form. Notice that

$$\det X=-(x_1^2+x_2^2+x_3^2)=-\|\vec{x}\|^2.\tag{11}$$
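Eqs. (10) and (11) translate directly into code. A minimal sketch, assuming $s_2=\begin{bmatrix}0&i\\-i&0\end{bmatrix}$ so that $X=\begin{bmatrix}x_3&x_1+ix_2\\x_1-ix_2&-x_3\end{bmatrix}$. The names `vector_to_matrix` and `matrix_to_vector` are hypothetical labels for the two directions of the correspondence; the second reads $x_1,x_2$ off the upper-right entry and $x_3$ off the upper-left entry of a traceless Hermitian matrix.

```python
# Sketch of Eqs. (10) and (11). Assumption: s2 = [[0, i], [-i, 0]], so that
# X = x1 s1 + x2 s2 + x3 s3 = [[x3, x1 + i x2], [x1 - i x2, -x3]]. Names are illustrative.


def vector_to_matrix(x):
    """The traceless Hermitian matrix X associated with x = (x1, x2, x3)."""
    x1, x2, x3 = x
    return [[complex(x3, 0), complex(x1, x2)],
            [complex(x1, -x2), complex(-x3, 0)]]


def matrix_to_vector(X):
    """Inverse map for a traceless Hermitian X: x1, x2 from X[0][1], x3 from X[0][0]."""
    return (X[0][1].real, X[0][1].imag, X[0][0].real)


def is_traceless_hermitian(X, tol=1e-12):
    hermitian = all(abs(X[r][c] - X[c][r].conjugate()) < tol
                    for r in range(2) for c in range(2))
    return hermitian and abs(X[0][0] + X[1][1]) < tol


def det(X):
    return X[0][0] * X[1][1] - X[0][1] * X[1][0]


x = (1.0, 2.0, 3.0)
X = vector_to_matrix(x)
print(is_traceless_hermitian(X))   # True
print(det(X).real)                 # -14.0, i.e. -(1 + 4 + 9)

# A traceless Hermitian matrix written down directly comes back unchanged:
Y = [[0.5 + 0j, 2 - 1j], [2 + 1j, -0.5 + 0j]]
print(vector_to_matrix(matrix_to_vector(Y)) == Y)   # True
```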

Let $U$ be a unitary matrix. We act with unitary matrices on vectors using the “jaw operation”: we take the matrix representation $X$ of the vector in the jaws:

$$X\mapsto UXU^*.$$

Now, since $X$ is Hermitian, $UXU^*$ is also Hermitian: $(UXU^*)^*=UX^*U^*=UXU^*$. Since $X$ is of trace zero and $U$ is unitary, $UXU^*$ is also of trace zero: $\operatorname{tr}(UXU^*)=\operatorname{tr}(U^*UX)=\operatorname{tr}X=0$. Therefore there exists a vector $\vec{x}'$ such that:

$$UXU^*=X',$$

where $X'$ is the matrix associated with $\vec{x}'$ by (10).
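Here is the jaw operation as a short sketch in plain Python. Assumptions: vectors are encoded by (10) with $s_2=\begin{bmatrix}0&i\\-i&0\end{bmatrix}$, and the unitary $U$ is taken to be a unit quaternion in the matrix form $\begin{bmatrix}q_0+iq_3&-q_2+iq_1\\q_2+iq_1&q_0-iq_3\end{bmatrix}$ with arbitrary sample coefficients. All function names are illustrative. The code checks that $UXU^*$ is again Hermitian and traceless, so that it encodes a new vector $\vec{x}'$.

```python
# Sketch of the jaw operation X -> U X U* acting on the matrix of a vector.
# Assumptions: vectors are encoded as X = x1 s1 + x2 s2 + x3 s3 with
# s2 = [[0, i], [-i, 0]]; U is a unit quaternion in matrix form. Names are illustrative.

import math


def matmul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(2)) for c in range(2)]
            for r in range(2)]


def dagger(a):
    return [[complex(a[c][r]).conjugate() for c in range(2)] for r in range(2)]


def vector_to_matrix(x):
    x1, x2, x3 = x
    return [[complex(x3, 0), complex(x1, x2)], [complex(x1, -x2), complex(-x3, 0)]]


def matrix_to_vector(X):
    return (X[0][1].real, X[0][1].imag, X[0][0].real)


def quaternion_matrix(q0, q1, q2, q3):
    return [[complex(q0, q3), complex(-q2, q1)], [complex(q2, q1), complex(q0, -q3)]]


def jaw(U, x):
    """Return (x', X') where X' = U X U* and X is the matrix of x."""
    X_new = matmul(matmul(U, vector_to_matrix(x)), dagger(U))
    return matrix_to_vector(X_new), X_new


q = (1.0, 2.0, 3.0, 4.0)
n = math.sqrt(sum(c * c for c in q))
U = quaternion_matrix(*(c / n for c in q))
x_new, X_new = jaw(U, (1.0, -2.0, 0.5))

hermitian = all(abs(X_new[r][c] - X_new[c][r].conjugate()) < 1e-12
                for r in range(2) for c in range(2))
traceless = abs(X_new[0][0] + X_new[1][1]) < 1e-12
print(hermitian, traceless)                                     # True True
print(jaw(quaternion_matrix(0, 0, 0, 1), (1.0, 2.0, 3.0))[0])  # (-1.0, -2.0, 3.0)
```

The last line uses $U=\mathbf{k}$ (only $q_3=1$), which sends $(x_1,x_2,x_3)$ to $(-x_1,-x_2,x_3)$, a half-turn about the third axis.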

If $X'=UXU^*$, then $\det X'=\det U\,\det X\,\det U^*=|\det U|^2\det X=\det X$, because $|\det U|=1$ for a unitary matrix. By (11) we must therefore have $\|\vec{x}'\|=\|\vec{x}\|$. In other words, the transformation $\vec{x}\mapsto\vec{x}'$ (which is linear) is an isometry: it preserves the length of all vectors. And it is known that every isometry that maps $0$ into $0$ (which is the case here) is given by an orthogonal matrix (see Wikipedia, Isometry). Therefore there exists a unique orthogonal matrix $R$ such that $\vec{x}'=R\vec{x}$. This way we have a map from $SU(2)$ to $O(3)$, the group of orthogonal matrices. We do not know yet whether $\det R=1$, that is, whether $R$ is a genuine rotation. It is, but that needs a proof, some proof…
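The map $U\mapsto R$ can be sketched in code: the $b$-th column of $R$ is the image of the $b$-th basis vector under the jaw operation. As before this is plain Python under stated assumptions ($s_2=\begin{bmatrix}0&i\\-i&0\end{bmatrix}$, $U$ a unit quaternion in the matrix form $\begin{bmatrix}q_0+iq_3&-q_2+iq_1\\q_2+iq_1&q_0-iq_3\end{bmatrix}$, illustrative function names). It confirms $R^TR=I$ and evaluates $\det R$ for a sample $U$; seeing $\det R\approx 1$ in examples is, of course, not the proof that is still owed.

```python
# Sketch: the real 3x3 matrix R with x' = R x, where X' = U X U*.
# Column b of R is the image of the b-th basis vector. Assumptions:
# X = x1 s1 + x2 s2 + x3 s3 with s2 = [[0, i], [-i, 0]], U a unit quaternion in
# matrix form; all names are illustrative. Examples are not a proof that det R = 1.

import math


def matmul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(2)) for c in range(2)]
            for r in range(2)]


def dagger(a):
    return [[complex(a[c][r]).conjugate() for c in range(2)] for r in range(2)]


def vector_to_matrix(x):
    x1, x2, x3 = x
    return [[complex(x3, 0), complex(x1, x2)], [complex(x1, -x2), complex(-x3, 0)]]


def matrix_to_vector(X):
    return (X[0][1].real, X[0][1].imag, X[0][0].real)


def quaternion_matrix(q0, q1, q2, q3):
    return [[complex(q0, q3), complex(-q2, q1)], [complex(q2, q1), complex(q0, -q3)]]


def jaw(U, x):
    """The vector x' whose matrix is U X U*."""
    return matrix_to_vector(matmul(matmul(U, vector_to_matrix(x)), dagger(U)))


def rotation_matrix(U):
    basis = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    images = [jaw(U, e) for e in basis]          # images[b] is column b of R
    return [[images[b][a] for b in range(3)] for a in range(3)]


def is_orthogonal(R, tol=1e-9):
    """Check R^T R = I entrywise."""
    return all(abs(sum(R[k][a] * R[k][b] for k in range(3)) - (1.0 if a == b else 0.0)) < tol
               for a in range(3) for b in range(3))


def det3(R):
    return (R[0][0] * (R[1][1] * R[2][2] - R[1][2] * R[2][1])
            - R[0][1] * (R[1][0] * R[2][2] - R[1][2] * R[2][0])
            + R[0][2] * (R[1][0] * R[2][1] - R[1][1] * R[2][0]))


q = (1.0, 2.0, 3.0, 4.0)
n = math.sqrt(sum(c * c for c in q))
R = rotation_matrix(quaternion_matrix(*(c / n for c in q)))
print(is_orthogonal(R))   # True
print(det3(R))            # close to 1 for this sample
```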